Welcome to the Active Perception Group

Combining computer vision, deep learning and AI (Generative AI) algorithms to interpret people’s actions and the scene’s geometry.

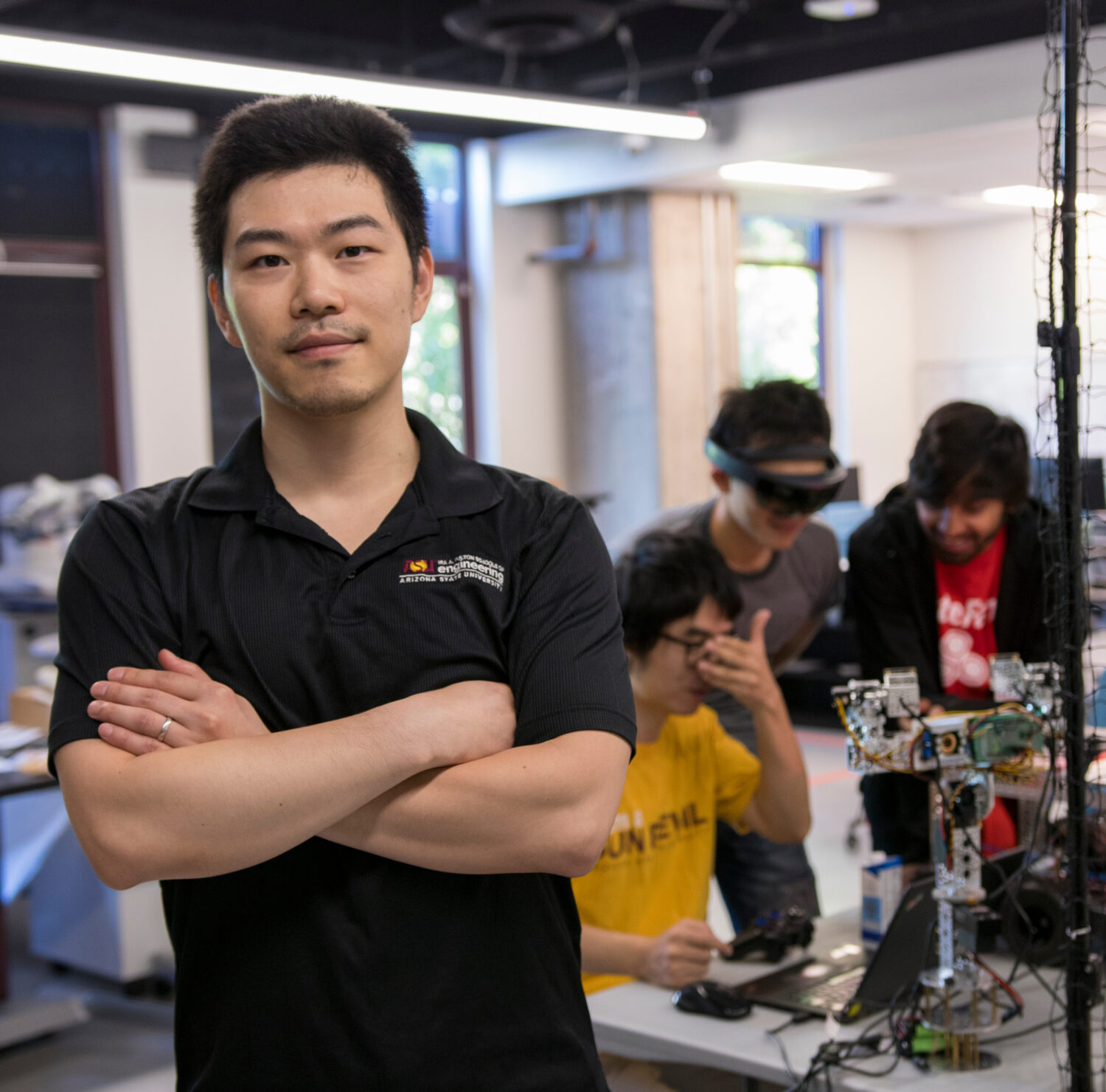

Yezhou (also goes by ‘YZ’) Yang is an Associate Professor at the School of Computing and Augmented Intelligence (SCAI), Arizona State University. He directs the ASU Active Perception Group.

For the latest research, teaching, and entrepreneurial updates, please refer to the following: LinkedIn – Google Scholar – X (Twitter)

His primary interests lie in Cognitive Robotics, Computer Vision, and Robot Vision, especially exploring visual primitives in human action understanding from visual input, grounding them in natural language, and high-level reasoning over the primitives for intelligent robots. His research mainly focuses on solutions to visual learning, which significantly reduce the time needed to program intelligent agents. These solutions involve Computer Vision, Deep Learning, and AI algorithms to interpret people’s actions and the scene’s geometry. His research draws on the strengths of the symbolic approach, connectionism, and dynamicism.

Before joining ASU, Dr. Yang was a Postdoctoral Research Associate at the Computer Vision Lab and the Perception and Robotics Lab, with the University of Maryland Institute for Advanced Computer Studies. He received his Ph.D. from the Computer Science department of the University of Maryland, with a thesis on “Manipulation Action Understanding for Observation and Execution”.

A few words to future ASU APGers

For future Ph.D. members: our admission is committee-based, and we have a large AI-related faculty group at ASU. I would encourage you to apply to our CS/CSE/CEN Ph.D. program first. If you are interested in working with ASU APG, you could consider listing my name as your potential Ph.D. advisor. For future Master’s members: I typically recruit Master’s students from my CSE 598 Perception in Robotics course, so please consider taking it first. All the best with your applications to graduate schools!

‘YZ’ Yezhou Yang

Twitter: @Prof_YZ

Associate Professor, School of Computing and AI, ASU

Fulton Entrepreneurial Professor

Thrust Lead, Situation Awareness, ACT, Science and Technology Centers

Tech Lead, Situation Awareness, Institute of Automated Mobility

Co-founder, ARGOS Vision Inc.

Contact

Office:

BYENG 562

699 S Mill Ave.

Tempe, AZ 85281

Email: [email protected]

Full Publication List

Latest News

ASU APG will present 4 main conference papers at CVPR 2024. More info.

Yezhou “YZ” Yang discussed the safety of autonomous vehicles with Arizona PBS. Watch the interview here.

YZ serves as Area Chair for CVPR 2024, AAAI 2024, NeurIPS 2023 and ICLR 2023, and Associate Editor for ICRA 2024 and 2023.

ICRA 2023: “CAROM Air – Vehicle Localization and Traffic Scene Reconstruction from Aerial Video” has been accepted and will be presented at the 2023 IEEE International Conference on Robotics and Automation (ICRA). Full draft and Data and code. This work features the last two years of close collaboration between ASU APG, Rider University, Arizona DOT and the Institute of Automated Mobility.

YZ co-organizes WACV 2023 Tutorial on Semantic Data Engineering for Robustness under Multimodal Settings (SERUM). Tutorial Webpage

YZ co-organizes CoRL 2022 Workshop on Learning, Perception, and Abstraction for Long-Horizon Planning. Workshop Webpage ASU APG

YZ Yang presents APG’s effort towards Robust and Socially-Adept Autonomous Vehicles Through Vehicle Trajectory Sensing for Safety Assessment at the University Research session of the ITS AZ conference, Mesa, AZ. Slide deck

ASU APG received a three-year NSF Robust Intelligence core research grant (collaborating with Prof. Chitta Baral) to develop An Active Approach for Data Engineering to Improve Vision-Language Tasks. Project public info

ASU APG received a three-year NSF SaTC research grant (with Prof. Max Yi Ren and Prof. Ni Trieu) to develop Decentralized Attribution and Secure Training of Generative Models. Project public info

Pratyay Banerjee and APG PhD Tejas Gokhale: “Weakly Supervised Relative Spatial Reasoning for Visual Question Answering” has been accepted and will be presented at 2021 International Conference on Computer Vision (ICCV’21) Full draft and Data and code

APG PhD Xin Ye: “Hierarchical and Partially Observable Goal-driven Policy Learning with Goals Relational Graph” has been accepted and will be presented at 2021 Conference on Computer Vision and Pattern Recognition (CVPR’21) Full draft and Data and code

APG PhD Joshua Feinglass: “SMURF: SeMantic and linguistic UndeRstanding Fusion for Caption Evaluation via Typicality Analysis” has been accepted and will be presented at the annual meeting of the Association for Computational Linguistics (ACL) 2021. Long paper as Oral presentation. Full draft and Data and code

APG PhD Xin Ye: “Efficient Robotic Object Search via HIEM: Hierarchical Policy Learning with Intrinsic-Extrinsic Modeling” has been accepted to RA-L and will be presented at IEEE International Conference on Robotics and Automation (ICRA’21) Full draft and Data and code

ASU CS PhD Duo Lv and APG PhD Varun Jammula: “CAROM – Vehicle Localization and Traffic Scene Reconstruction from Monocular Cameras on Road Infrastructures” has been accepted and will be presented at IEEE International Conference on Robotics and Automation (ICRA’21) Full draft and Data and code

ASU APG Yezhou Yang presents APG’s effort on Visual Recognition beyond Appearances, and its Robotic Applications at the USC ISI NLP seminar on Jan 14th, 2020 Full talk and Q&A

APG has 2 papers accepted at ICLR 2021! Changhoon Kim: “Decentralized Attribution of Generative Models” and Jacob Zhiyuan Fang: “SEED: Self-supervised Distillation For Visual Representation”.

ASU APG Yezhou Yang served as Area Chair for AAAI 2021.

ASU APG Yezhou Yang presents APG’s effort on Visual Recognition beyond Appearances, and its Robotic Applications at the ONLINE Special Robotics Seminar, Maryland Robotics Center on December 4, 2020 Full talk and Q&A

APG has 3 V&L long papers accepted at EMNLP 2020 (Two Accepts and one Accept-Finding): “Video2Commonsense: Generating Commonsense Descriptions to Enrich Video Captioning”, “MUTANT: A Training Paradigm for Out-of-Distribution Generalization in Visual Question Answering”, and “Diverse Visuo-Linguistic Question Answering (DVLQA) Challenge”.

ASU APG Yezhou presents APG’s effort on Towards Robust and Socially-Adept Autonomous Vehicles and Vehicle Trajectory Sensing using Existing Monocular Traffic Cameras for Safety Assessment at the WORKSHOP ON AUTOMATED VEHICLE SAFETY: VERIFICATION, VALIDATION AND TRANSPARENCY collocated with IEEE ITSC 2020 Program info

ASU APG received a three-year NSF Cyber-Physical Systems (CPS) research grant (with Prof. Georgios Fainekos and Prof. Jyo Deshmukh (USC)) to develop Spatio-Temporal Logics for Analyzing and Querying Perception Systems. Project public info

ASU APG Yezhou presents APG’s effort on Vehicle Trajectory Sensing using Existing Monocular Traffic Cameras for Safety Assessment at the Automated Vehicles Symposium (AVS) breakout session on Safety Assurance of Automated Driving Program info

APG PhD Tejas Gokhale: “VQA-LOL: Visual Question Answering under the Lens of Logic” has been accepted and will be presented at 2020 European Conference on Computer Vision (ECCV’20) Full draft and Data and code

APG Visiting PhD Zhe Wang and PhD Jacob Zhiyuan Fang: “ViTAA: Visual-Textual Attributes Alignment in Person Search by Natural Language” has been accepted and will be presented at 2020 European Conference on Computer Vision (ECCV’20) Full draft and Data and code

APG master’s thesis student Kausic Gunasekar: “Low to High Dimensional Modality Hallucination using Aggregated Fields of View for Robust Perception” has been accepted and will be published in Robotics and Automation Letters (RA-L) with a presentation at ICRA 2020. Full draft and Data and code.

APG PhD candidate Mohammad Farhadi: “TKD: Temporal Knowledge Distillation for Active Perception” has been accepted and will be presented at 2020 Winter Conference on Applications of Computer Vision (WACV ’20) Full draft and Data and code

ASU APG will participate in the DARPA KAIROS program (with USC ISI and UCF teams) to develop an AI system for discovering schemas from diverse data. Program public info

ASU APG received a three-year NSF National Robotics Initiative (NRI) grant (with Prof. Wenlong Zhang and Prof. Yi Ren) to develop Socially-Adept Autonomous Vehicles using active vision and reasoning. Project public info

ASU APG received a one-year NSF CCRI grant (with Prof. Dijiang Huang) to develop hand-related critical research data services. Project public info

ASU APG won the Amazon AWS ML research award 2019! Award info

APG PhD student Xin Ye: “GAPLE: Generalizable Approaching Policy LEarning for Robotic Object Searching in Indoor Environment” has been accepted and will be published in Robotics and Automation Letters (RA-L) with a presentation at IROS 2019. Full draft

Somak Aditya: “Integrating Knowledge and Reasoning in Image Understanding” has been accepted and will be presented at the IJCAI 2019 survey track. Full draft

APG PhD student Xin Ye: “Robot Learning of Manipulation Activities with Overall Planning through Precedence Graph” has been accepted and will be published in the Special Issue on Semantic Policy and Action Representations for Autonomous Robots of the Journal of Robotics and Autonomous Systems.

APG PhD student Jacob Zhiyuan Fang: “Modularized Textual Grounding for Counterfactual Resilience” has been accepted for presentation at the Conference on Computer Vision and Pattern Recognition 2019. paper Acceptance rate: 25%

Collaborative work with ASU SEMTE and Polytech schools: “How Shall I Drive? Interaction Modeling and Motion Planning towards Empathetic and Socially-Graceful Driving” has been accepted for presentation at the 2019 International Conference on Robotics and Automation (ICRA). paper

Rudra Saha: “Spatial Knowledge Distillation to aid Visual Reasoning” has been accepted for presentation at WACV 2019. paper

ASU APG 2018 summer vision and language SIG members Chia-yu Hsu and Siyu Zhou won the first AI world cup AI commentator competition and a $5k prize! Congratulations! ASU Now news

Xin Ye and Shibin Zheng: “Active Object Perceiver: Recognition-guided Policy Learning for Object Searching on Mobile Robots” has been accepted for presentation at the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2018). paper and video demo

ASU APG received a three-year ONR grant (with Prof. Hasan Davulcu’s group and Prof. Adam Cohen’s group) to conduct fundamental research on visually predicting and influencing individual and group attitudes and behaviors.

ASU APG received a three-year NSF RI small grant (with Prof. Chitta Baral’s group) to develop the THE-QA (Technical, Hard and Explainable Question Answering) Challenge. Project public info

Somak Aditya has successfully defended his PhD thesis and is going to join Adobe Research. Congratulations Somak!

Mo Izady: “A Weakly-Supervised Learning-Based Feature Localization in Confocal Laser Endomicroscopy Glioma Images” has been accepted for presentation at the 21st International Conference on Medical Image Computing & Computer Assisted Intervention (MICCAI 2018). (~35% acceptance rate)

Somak Aditya: “Combining Knowledge and Reasoning through Probabilistic Soft Logic for Image Puzzle Solving” has been accepted for presentation at the 34th Conference on Uncertainty in Artificial Intelligence (UAI 2018). (30% acceptance rate)

ASU APG (together with Dr. Baral and Dr. Tugara) received an equipment fund for an 8xV100 deep learning DevBox from the Ira A. Fulton Schools of Engineering!

ASU APG (together with Dr. Wenlong Zhang and Dr. Yi Ren) received a CASCADE seed award for a pilot study on “Towards Socially Graceful Autonomous Vehicles via Data-driven Modeling and Optimization” from the CASCADE center.

One workshop is accepted for the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2018) which is among the flagship conferences in the field of robotics. Eren Erdal Aksoy, Yezhou Yang, Karinne Ramirez-Amaro, Neil Dantam, Gordon Cheng: Semantic Policy and Action Representations for Autonomous Robots Conference: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems

Mo Izady: “Convolutional Neural Networks: Ensemble Modeling, Fine-Tuning and Unsupervised Semantic Localization for Neurosurgical CLE Images” has been accepted for publication in the Journal of Visual Communication and Image Representation (JVCI).

Our First Workshop on Induce and Deduce: Integrating learning of representations and models with deductive, explainable reasoning that leverages knowledge is accepted at KR, the 16th International Conference on Principles of Knowledge Representation and Reasoning. More info coming soon.

“Extrinsic Dexterity through Active Slip Control using Deep Predictive Models”, a work that originated from a class project in the CSE 591 Perception in Robotics course and is a collaboration with the Interactive Robotics Lab at ASU, has been accepted to appear at ICRA 2018. Congratulations to Simon!

Yezhou Yang receives the five-year NSF Faculty Early Career Development (CAREER) Award to address the problem of Visual Recognition with Knowledge (VR-K). Project public info

Yezhou Yang receives one of the awards of the 2018 round of the inaugural Global Sports Institute (GSI) “Sports 2036” Grant Program. GSI and Adidas; the winning topic: Non-invasive Performance Tracking with Smart Cameras.

Mohammad Farhadi receives ASU Graduate and Professional Student Association (GPSA) Individual Travel Grant and AAAI student volunteer scholarship to attend AAAI 2018.

Somak Aditya: “Image Understanding using Vision and Reasoning through Scene Description Graph” has been accepted for publication in the Journal of Computer Vision and Image Understanding (CVIU).

Somak Aditya: “Explicit Reasoning over End-to-End Neural Architectures for Visual Question Answering” has been accepted for presentation at the Thirty-Second AAAI Conference on Artificial Intelligence (AAAI-18).

Xin Ye: “Collision-free Trajectory Planning in Human-robot Interaction through Hand Movement Prediction from Vision” has been accepted at 2017 Humanoids conference.

Yezhou: Invited to present a research poster at the Computing Community Consortium (CCC) Symposium on Computing Research Addressing National Priorities and Societal Needs in Washington, DC on October 23-24.

Yezhou: Tech talk “Vision-Language integration challenges and needs in Robotics” at the 3rd integrating Vision and Language training school, Sep 5th, Athens, Greece.

Yezhou: Tech talk “Active Perception Beyond Appearance, and its Robotic Applications” at the Brain team, Google Inc.

Yezhou: Co-organizing workshop 2nd Workshop on Semantic Policy and Action Representations for Autonomous Robots (SPAR) September 24th 2017 as part of the IROS 2017 conference in Vancouver, Canada.

Yezhou: Invited talk “Active Perception Beyond Appearance, and its Robotic Applications” at the Center for Vision, Cognition, Learning and Autonomy, University of California Los Angeles.

Somak Aditya Received the University Graduate Fellowship for the Spring 2017 semester. Congratulations!

Stephen McAleer has been selected as a Bidstrup Undergraduate Fellow for Spring 2017. Congratulations!

Yezhou Yang Received the KEEN Professorship award for Spring Semester 2017 The KEEN Professorship

“Prediction of Manipulation Actions” has been accepted for publication in the International Journal of Computer Vision (IJCV). preprint draft

Three papers are accepted to appear at the 2017 International Conference on Robotics and Automation (ICRA): “What Can I Do Around Here? Deep Functional Scene Understanding for Cognitive Robot”; “Fast Task-Specific Target Detection via Graph Based Constraints Representation and Checking”; and “Unsupervised Linking of Visual Features to Textual Descriptions in Long Manipulation Activities”, which is accepted together with RAL.

NEW Manuscript “Answering Image Riddles using Vision and Reasoning through Probabilistic Soft Logic”; Full dataset and related materials.

NEW !!! “Co-active Learning to Adapt Humanoid Movement for Manipulation” is accepted at IEEE-RAS International Conference on Humanoid Robots (Humanoids) 2016.

The LightNet package and report are accepted at the ACMMM open source software competition 2016. LightNet: A Versatile, Standalone Matlab-based Environment for Deep Learning and Technical report and git: https://github.com/yechengxi/LightNet.git

NEW !!! One paper is accepted at European Conference on Computer Vision (ECCV) 2016.

Check out our newly posted manuscript: From Images to Sentences through Scene Description Graphs using Commonsense Reasoning and Knowledge. For more information please refer to the webpage for data and demonstration of SDGs. Conference version appears at Advances in Cognitive Systems 2016: DeepIU: An Architecture for Image Understanding.